Playing a Game Against an AI Opponent

Introduction

In this post, I will show how I trained a reinforcement learning (RL) agent as an opponent in a fast-paced Christmas-themed game. I won’t go too deep into the details of the algorithm and will instead concentrate on how to build a game that integrates trained RL agents you can actually play against.

Unity, and more specifically the Unity Machine Learning Agents Toolkit, is used to create both the game scenes (currently two different levels) and the training environment. The RL agents are trained with the Proximal Policy Optimization (PPO) algorithm.

To show the final result, I have included a clip of me playing below (the player is the green ball and the opponent is the red one).

To play the game yourself, you can simply proceed to the Download section of this post and install either the provided mobile or desktop version.

All of my code is available here (both the Unity game and Python training files).

Rolling Balls of Christmas

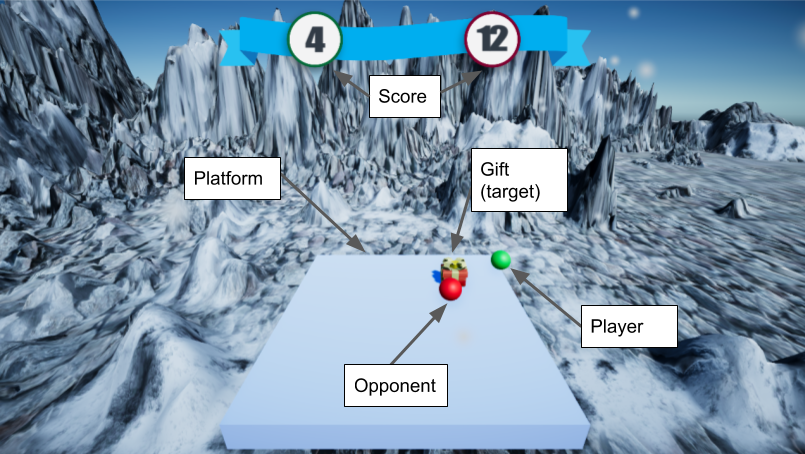

The rules of the game are simple. Both the player and the opponent control a ball on a square platform, on which a gift (the target) is also located. Each time either the player or the opponent reaches the gift, that side gets one point. Conversely, each time a ball falls off the platform, the other side gets one point. The first to reach 20 points wins the game. The opponent gets better with each level. The main components are summarized in the accompanying image.

The game is inspired by Unity’s Making a New Learning Environment tutorial, but modified to let us play against our trained agent.
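The scoring rules above can be written down in a few lines. This is only a Python sketch of the rules as described, not the game's actual Unity code; the names `apply_event`, `WINNING_SCORE` and the `"gift"` / `"fall"` event labels are made up for illustration.

```python
from typing import Dict, Optional

WINNING_SCORE = 20  # first side to reach this many points wins


def apply_event(scores: Dict[str, int], ball: str, event: str) -> Optional[str]:
    """Update the scores in place and return the winner, if there is one.

    `ball` is "player" or "opponent"; `event` is "gift" (the ball reached
    the gift) or "fall" (the ball fell off the platform). Both labels are
    hypothetical names chosen for this sketch.
    """
    other = "opponent" if ball == "player" else "player"
    if event == "gift":
        scores[ball] += 1    # reaching the gift rewards the ball itself
    elif event == "fall":
        scores[other] += 1   # falling off rewards the other side
    else:
        raise ValueError(f"unknown event: {event!r}")

    for name, points in scores.items():
        if points >= WINNING_SCORE:
            return name
    return None
```

For example, with the opponent on 19 points, a fall by the player ends the game in the opponent's favour.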

Training the Opponent

In its simplest form, training the opponent requires three things:

- A reward signal, which indicates the task (i.e. the goal) the agent has to perform.

- An environment which, for each state and action of our agent, will yield its next state.

- Finally, an algorithm which combines the reward signal and the environment to optimize the policy of our agent and maximize the expected cumulative reward.

In other words, our training environment needs to specify the states, actions and rewards that define our reinforcement learning problem.
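To make the interplay between these three ingredients more concrete, here is a minimal Python sketch of the agent-environment loop. It is not the project's training code and it does not implement PPO: `ToyLineEnv` is a hypothetical one-dimensional stand-in for the platform, the policy is a hand-written rule, and the reward values are borrowed loosely from this post. A learning algorithm such as PPO would use the collected states, actions and rewards to improve the policy between episodes.

```python
from typing import Callable, Tuple


class ToyLineEnv:
    """Hypothetical 1-D environment: the agent slides toward a target at x = 0."""

    def __init__(self, start: float = 1.0) -> None:
        self.x = start

    def step(self, action: float) -> Tuple[float, float, bool]:
        """Apply an action in [-1, 1]; return (next_state, reward, done)."""
        action = max(-1.0, min(1.0, action))
        self.x += 0.25 * action
        done = abs(self.x) < 0.125           # close enough to the target
        reward = -0.05 + (1.0 if done else 0.0)
        return self.x, reward, done


def run_episode(env: ToyLineEnv,
                policy: Callable[[float], float],
                max_steps: int = 100) -> float:
    """Roll out one episode and return the cumulative reward."""
    state = env.x
    total = 0.0
    for _ in range(max_steps):
        action = policy(state)                   # the policy picks an action
        state, reward, done = env.step(action)   # the environment yields the next state
        total += reward                          # the reward signal scores the step
        if done:
            break
    return total


def toward_target(x: float) -> float:
    """Hand-written policy for the sketch: always push toward x = 0."""
    return -1.0 if x > 0 else 1.0
```

Calling `run_episode(ToyLineEnv(), toward_target)` reaches the target in four steps, so the cumulative reward is four time penalties plus one target bonus.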

The state consists of eight numbers at each timestep:

- The relative position in X and Z of the agent with respect to the target (two numbers)

- The distance between the agent and the edges of the platform (four numbers)

- The agent velocity in X and Z (two numbers)
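As a rough illustration, the eight numbers listed above could be assembled as follows. This is a Python sketch rather than the project's Unity code: the function name `build_observation`, the ordering of the values, the sign convention (target minus agent) and the assumption of a square platform of half-width `half_size` centred at the origin are all hypothetical.

```python
from typing import List, Tuple


def build_observation(agent_pos: Tuple[float, float],
                      target_pos: Tuple[float, float],
                      agent_vel: Tuple[float, float],
                      half_size: float) -> List[float]:
    """Return the 8-number state; positions and velocities are (x, z) pairs."""
    ax, az = agent_pos
    tx, tz = target_pos
    vx, vz = agent_vel
    return [
        tx - ax, tz - az,   # relative position of the target (two numbers)
        half_size - ax,     # distance to the +X edge
        ax + half_size,     # distance to the -X edge
        half_size - az,     # distance to the +Z edge
        az + half_size,     # distance to the -Z edge
        vx, vz,             # agent velocity in X and Z (two numbers)
    ]
```

Keeping the observation this small means the network only has to relate a handful of geometric quantities to its actions.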

Two continuous actions are allowed: moving to the left and moving to the right.

The reward is defined as follows:

- A negative reward of 0.05 at each timestep

- A negative reward proportional to the distance of the agent from the target

- A large negative reward of 10.0 each time the agent falls off the platform

- A positive reward of 1.0 each time the agent reaches the target.
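Put together, the per-step reward might look like the Python sketch below. The constants 0.05, 10.0 and 1.0 come from the list above; the proportionality factor `distance_coeff` is not given in this post, so its default value here is purely an assumption, as is the choice to add all terms within the same step.

```python
def step_reward(distance_to_target: float,
                fell_off: bool,
                reached_target: bool,
                distance_coeff: float = 0.01) -> float:
    """Reward for one timestep; `distance_coeff` is a hypothetical value."""
    reward = -0.05                                   # time penalty at every step
    reward -= distance_coeff * distance_to_target    # penalty growing with distance
    if fell_off:
        reward -= 10.0                               # large penalty for falling off
    if reached_target:
        reward += 1.0                                # bonus for reaching the target
    return reward
```

The time and distance penalties push the agent to reach the gift quickly, while the much larger fall penalty discourages it from rushing over the edge.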

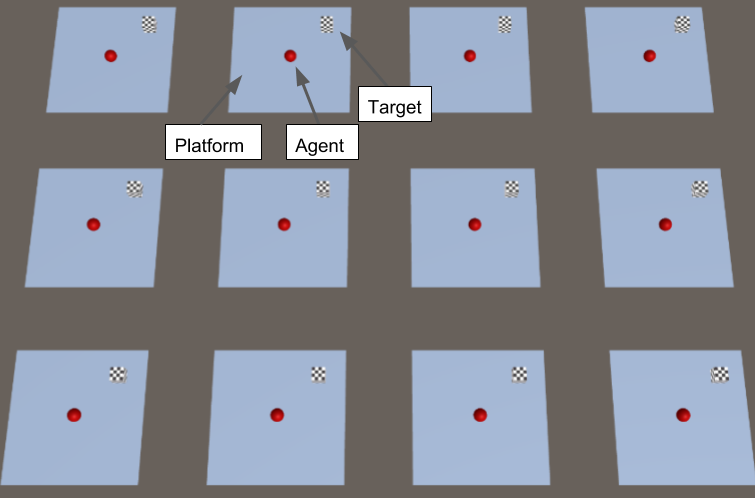

Finally, the training environment is illustrated in the accompanying image. Note that, to speed up training, twelve identical environments were run in parallel.

The training of the agents using PPO is shown in the following clip. For more details on the hyperparameters and neural network architecture used, refer to the GitHub page of the project.

As the clip shows, the agent learns very quickly to move toward the target location. Note that each “lag” in the video corresponds to a training iteration of the algorithm.

Creating a Game

The game currently consists of two levels. To obtain a reasonable level of difficulty for the player, the opponent's acceleration had to be reduced by a factor of 10 for level one and 5 for level two. This explains why the opponent sometimes seems to barely miss the target, especially in the first level: during training, it learned to correct its position for a specific acceleration rate, which differs from the one it actually plays with.

I think that this behaviour leads to more realistic gameplay, where you don’t have the impression of playing against an opponent that only follows a straight line to the target.
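The acceleration handicap described above amounts to scaling the force derived from the policy's output before applying it to the ball. The Python sketch below shows the idea only: the divisors 10 and 5 come from this post, while `opponent_force`, `base_force` and its default value are hypothetical and do not reflect the actual Unity implementation.

```python
from typing import Dict, Sequence, Tuple

# Level -> factor by which the opponent's acceleration is reduced (from the post).
ACCELERATION_DIVISOR: Dict[int, float] = {1: 10.0, 2: 5.0}


def opponent_force(action: Sequence[float],
                   level: int,
                   base_force: float = 10.0) -> Tuple[float, ...]:
    """Scale the policy's continuous action into a weakened force.

    `base_force` stands for the multiplier used during training
    (an assumed value); each level divides it by its handicap.
    """
    scale = base_force / ACCELERATION_DIVISOR[level]
    return tuple(a * scale for a in action)
```

Because the policy still outputs actions tuned for the training-time `base_force`, the weakened ball responds more slowly than the agent expects, which matches the near misses seen in level one.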

Download

At the moment, the game is available for Windows & Android.

- Link for Windows. Extract the zipped folder & double click on the .exe to play.

- Link for Android. Download it to your device and install it to add the game to your apps.

To move the ball on Windows, use either the arrow keys or the W (up), A (left), S (down) and D (right) keys. On a mobile device, use the provided control at the bottom right of the screen.